glasses.models.segmentation package

Subpackages

Module contents

Segmentation models

- class glasses.models.segmentation.FPN(in_channels: int = 1, n_classes: int = 2, encoder: glasses.models.base.Encoder = <class 'glasses.models.classification.resnet.ResNetEncoder'>, decoder: torch.nn.modules.module.Module = <class 'glasses.models.segmentation.fpn.FPNDecoder'>, **kwargs)

Bases:

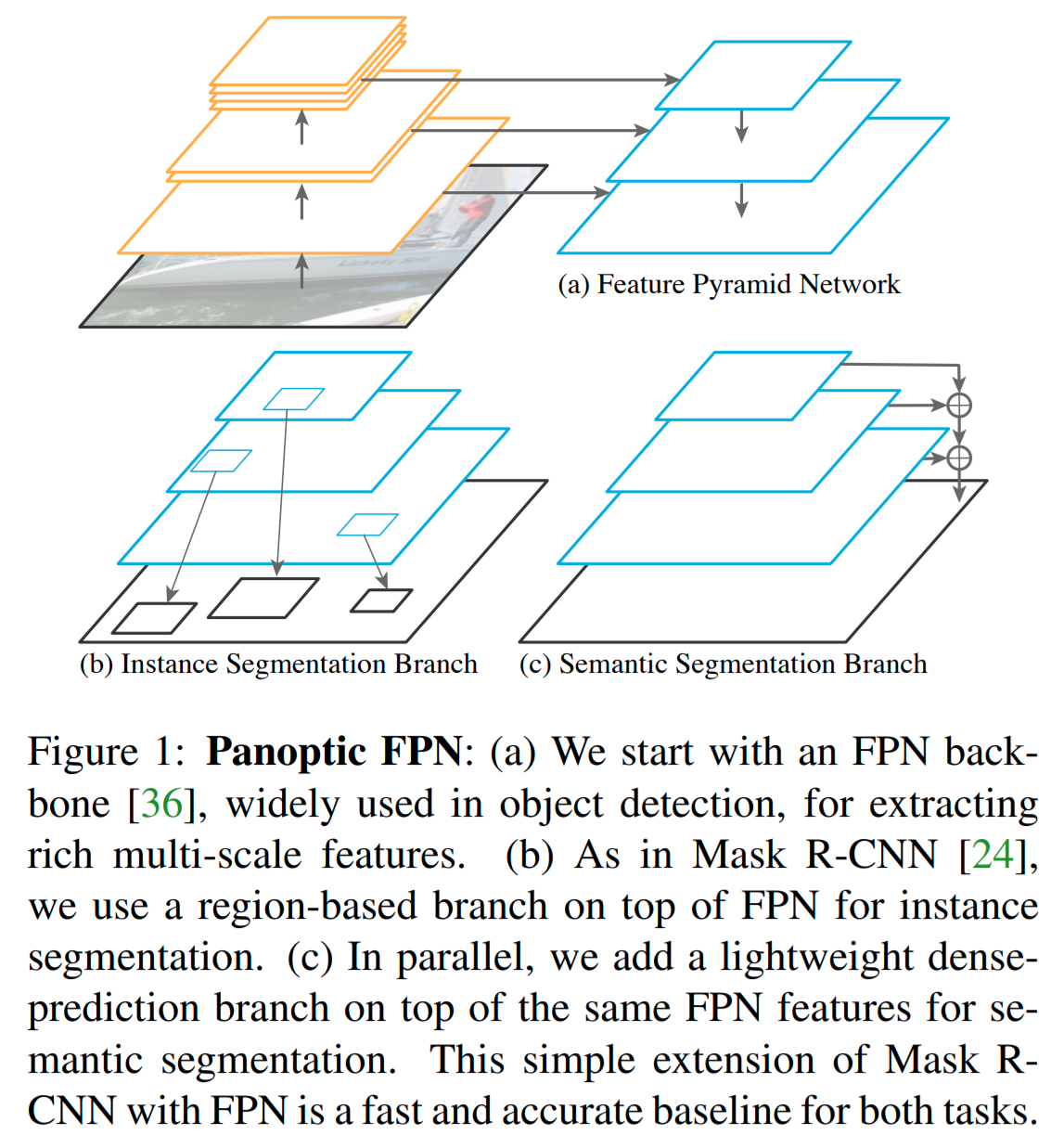

glasses.models.segmentation.base.SegmentationModuleImplementation of Feature Pyramid Networks proposed in Feature Pyramid Networks for Object Detection

Warning

This model should only be used to extract features from an image. The output is a tensor of shape [B, N, <prediction_width>, \(S_i\), \(S_i\)], where \(S_i\) is the spatial size of the \(i\)-th stage of the encoder. For image segmentation, please use PFPN.
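To make \(S_i\) concrete, here is a minimal sketch of the arithmetic for a square input. The cumulative strides `(4, 8, 16, 32)` are an assumption: they are the usual downsampling factors of a ResNet-style encoder and are not read from glasses itself. The helper `stage_sizes` is hypothetical.

```python
def stage_sizes(input_size, strides=(4, 8, 16, 32)):
    """Return the spatial side S_i of each encoder stage for a square input.

    `strides` is an assumption: the cumulative downsampling factors of a
    ResNet-style encoder. Other encoders may use different values.
    """
    sizes = []
    for stride in strides:
        # Ceiling division: padded stride-2 layers round odd sizes up.
        sizes.append(-(-input_size // stride))
    return sizes


print(stage_sizes(224))  # [56, 28, 14, 7]
```

Under these assumptions, a 224 x 224 image would yield feature maps with sides 56, 28, 14 and 7, one per stage.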

Examples

Default models

>>> FPN()

You can easily customize your model

>>> # change activation
>>> FPN(activation=nn.SELU)
>>> # change number of classes (default is 2)
>>> FPN(n_classes=2)
>>> # change encoder
>>> fpn = FPN(encoder=lambda *args, **kwargs: ResNet.resnet26(*args, **kwargs).encoder)
>>> fpn = FPN(encoder=lambda *args, **kwargs: EfficientNet.efficientnet_b2(*args, **kwargs).encoder)
>>> # change decoder
>>> FPN(decoder=partial(FPNDecoder, pyramid_width=64, prediction_width=32))
>>> # pass a different block to the encoder
>>> FPN(encoder=partial(ResNetEncoder, block=SENetBasicBlock))
>>> # all *Encoder classes can be used directly
>>> fpn = FPN(encoder=partial(ResNetEncoder, block=ResNetBottleneckBlock, depths=[2,2,2,2]))
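The examples above pass `encoder` and `decoder` as callables (a class, a `partial`, or a lambda) rather than as ready-made instances. The following sketch shows that factory pattern in isolation. `ToyEncoder` and `ToyModel` are hypothetical stand-ins, and the way keyword arguments are forwarded is an assumption, not the actual glasses implementation.

```python
from functools import partial


class ToyEncoder:
    """Hypothetical stand-in for an encoder class; not part of glasses."""

    def __init__(self, in_channels, depths=(2, 2, 2, 2)):
        self.in_channels = in_channels
        self.depths = list(depths)


class ToyModel:
    """Hypothetical model that builds its encoder from a factory callable."""

    def __init__(self, in_channels=1, encoder=ToyEncoder, **kwargs):
        # The model supplies in_channels itself; any extra keyword
        # arguments are forwarded to the factory (an assumption here).
        self.encoder = encoder(in_channels, **kwargs)


default = ToyModel()
# partial pre-binds `depths`, so the model can still call encoder(in_channels).
custom = ToyModel(in_channels=3, encoder=partial(ToyEncoder, depths=[3, 4, 6, 3]))
print(default.encoder.depths, custom.encoder.in_channels, custom.encoder.depths)
```

Passing a factory instead of an instance lets the model decide constructor arguments such as `in_channels`, while `partial` fixes only the options the caller cares about.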

- Parameters

in_channels (int, optional) – Number of channels in the input image. Defaults to 1.

n_classes (int, optional) – Number of output classes. Defaults to 2.

encoder (Encoder, optional) – Callable that builds the encoder. Defaults to ResNetEncoder.

decoder (nn.Module, optional) – Callable that builds the decoder. Defaults to FPNDecoder.

Initializes internal Module state, shared by both nn.Module and ScriptModule.

- training: bool

- class glasses.models.segmentation.PFPN(*args, n_classes: int = 2, decoder: torch.nn.modules.module.Module = functools.partial(<class 'glasses.models.segmentation.fpn.FPNDecoder'>, segmentation_branch=functools.partial(<class 'glasses.models.segmentation.fpn.FPNSegmentationBranch'>, layer=<class 'glasses.models.segmentation.fpn.PFPNSegmentationLayer'>)), **kwargs)

Bases: glasses.models.segmentation.fpn.FPN

Implementation of Panoptic Feature Pyramid Networks, proposed in the paper Panoptic Feature Pyramid Networks.

Each feature map produced by the segmentation branch is upsampled to \(\frac{1}{4}\) of the input resolution (for example, \(56\) for a \(224 \times 224\) input with a ResNet encoder). The feature maps are then summed into a single tensor, which is upsampled to the input spatial shape.
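The merge step described above can be sketched without any framework. This is only an illustration on single-channel nested lists, assuming nearest-neighbour upsampling and square maps whose sides divide evenly. The helpers `upsample_nearest` and `merge_pyramid` are hypothetical and are not how glasses implements the layer.

```python
def upsample_nearest(grid, factor):
    """Repeat every cell of a 2D list `factor` times along both axes."""
    out = []
    for row in grid:
        wide = [value for value in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def merge_pyramid(features, target_size, input_size):
    """Upsample each square map to `target_size`, sum them, then upsample to `input_size`."""
    merged = [[0.0] * target_size for _ in range(target_size)]
    for grid in features:
        up = upsample_nearest(grid, target_size // len(grid))
        for i in range(target_size):
            for j in range(target_size):
                merged[i][j] += up[i][j]
    return upsample_nearest(merged, input_size // target_size)


# Toy pyramid for a 16x16 input: maps at 1/4, 1/8 and 1/16 resolution.
features = [
    [[1.0] * 4 for _ in range(4)],
    [[2.0] * 2 for _ in range(2)],
    [[3.0]],
]
mask = merge_pyramid(features, target_size=4, input_size=16)
print(len(mask), len(mask[0]), mask[0][0])  # 16 16 6.0
```

Every map is first brought to the common 1/4 resolution (4 x 4 here), so the element-wise sum is well defined before the final upsampling to 16 x 16.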

Examples

Create a default model

>>> PFPN()

You can easily customize your model

>>> # change activation
>>> PFPN(activation=nn.SELU)
>>> # change number of classes (default is 2)
>>> PFPN(n_classes=2)
>>> # change encoder
>>> pfpn = PFPN(encoder=lambda *args, **kwargs: ResNet.resnet26(*args, **kwargs).encoder)
>>> pfpn = PFPN(encoder=lambda *args, **kwargs: EfficientNet.efficientnet_b2(*args, **kwargs).encoder)
>>> # change decoder
>>> PFPN(decoder=partial(PFPNDecoder, pyramid_width=64, prediction_width=32))
>>> # pass a different block to the encoder
>>> PFPN(encoder=partial(ResNetEncoder, block=SENetBasicBlock))
>>> # all *Encoder classes can be used directly
>>> pfpn = PFPN(encoder=partial(ResNetEncoder, block=ResNetBottleneckBlock, depths=[2,2,2,2]))

- Parameters

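in_channels (int, optional) – Number of channels in the input image. Defaults to 1.

n_classes (int, optional) – Number of output classes. Defaults to 2.

encoder (Encoder, optional) – Callable that builds the encoder. Defaults to ResNetEncoder.

decoder (nn.Module, optional) – Callable that builds the decoder. Defaults to FPNDecoder with an FPNSegmentationBranch that uses PFPNSegmentationLayer.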

Initializes internal Module state, shared by both nn.Module and ScriptModule.

- training: bool

- class glasses.models.segmentation.UNet(in_channels: int = 1, n_classes: int = 2, encoder: glasses.models.base.Encoder = <class 'glasses.models.segmentation.unet.UNetEncoder'>, decoder: torch.nn.modules.module.Module = <class 'glasses.models.segmentation.unet.UNetDecoder'>, **kwargs)

Bases:

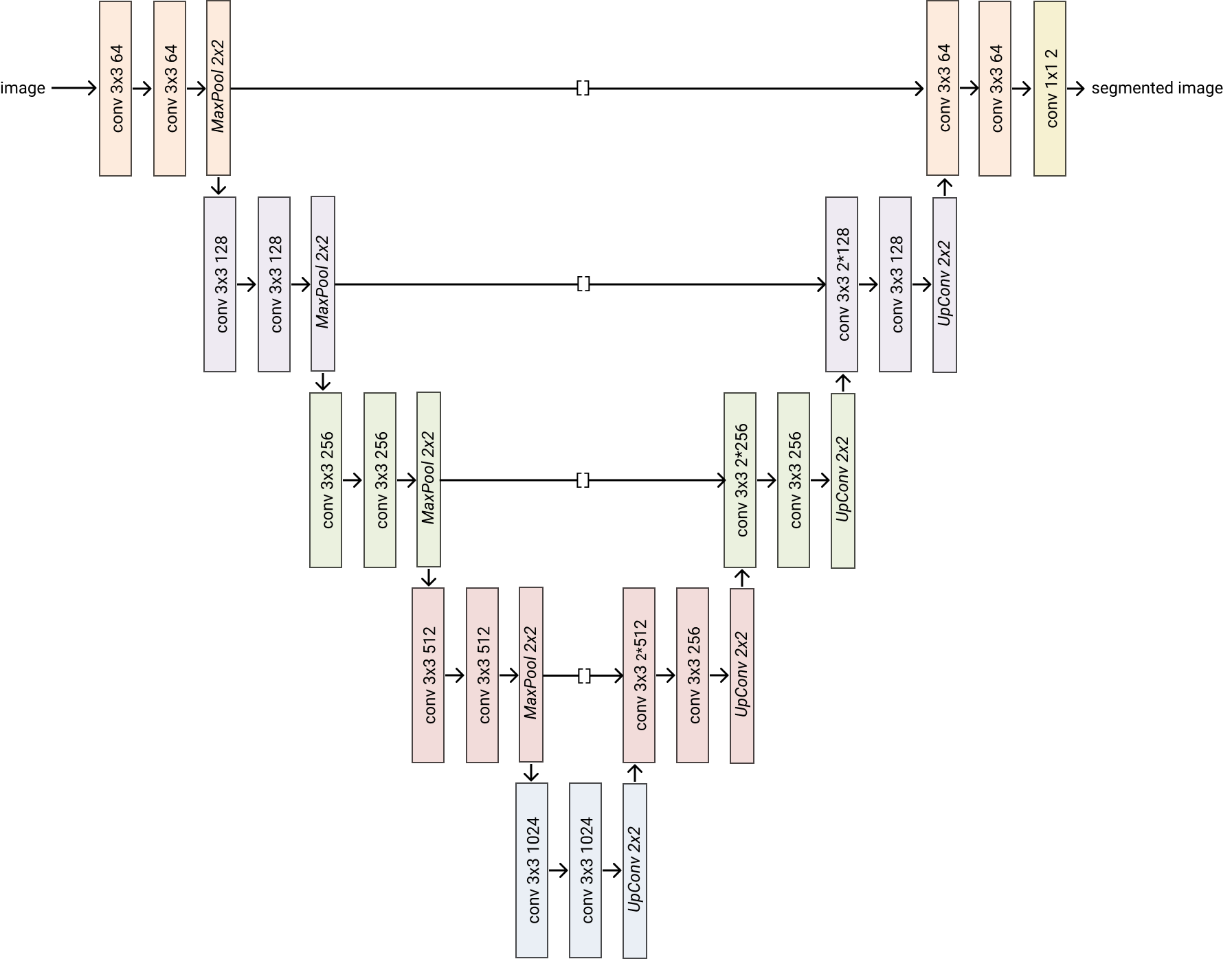

glasses.models.segmentation.base.SegmentationModuleImplementation of Unet proposed in U-Net: Convolutional Networks for Biomedical Image Segmentation

Examples

Default models

>>> UNet()

You can easily customize your model

>>> # change activation
>>> UNet(activation=nn.SELU)
>>> # change number of classes (default is 2)
>>> UNet(n_classes=2)
>>> # change encoder
>>> unet = UNet(encoder=lambda *args, **kwargs: ResNet.resnet26(*args, **kwargs).encoder)
>>> unet = UNet(encoder=lambda *args, **kwargs: EfficientNet.efficientnet_b2(*args, **kwargs).encoder)
>>> # change decoder
>>> UNet(decoder=partial(UNetDecoder, widths=[256, 128, 64, 32, 16]))
>>> # pass a different block to the encoder
>>> UNet(encoder=partial(UNetEncoder, block=SENetBasicBlock))
>>> # all *Encoder classes can be used directly
>>> unet = UNet(encoder=partial(ResNetEncoder, block=ResNetBottleneckBlock, depths=[2,2,2,2]))

- Parameters

in_channels (int, optional) – Number of channels in the input image. Defaults to 1.

n_classes (int, optional) – Number of output classes. Defaults to 2.

encoder (Encoder, optional) – Callable that builds the encoder. Defaults to UNetEncoder.

decoder (nn.Module, optional) – Callable that builds the decoder. Defaults to UNetDecoder.

Initializes internal Module state, shared by both nn.Module and ScriptModule.

- training: bool