glasses.models.segmentation.fpn package

Module contents

- class glasses.models.segmentation.fpn.FPN(in_channels: int = 1, n_classes: int = 2, encoder: glasses.models.base.Encoder = <class 'glasses.models.classification.resnet.ResNetEncoder'>, decoder: torch.nn.modules.module.Module = <class 'glasses.models.segmentation.fpn.FPNDecoder'>, **kwargs)

Bases: glasses.models.segmentation.base.SegmentationModule

Implementation of Feature Pyramid Networks proposed in Feature Pyramid Networks for Object Detection

Warning

This model should only be used to extract features from an image. The output is a tensor of shape [B, N, <prediction_width>, \(S_i\), \(S_i\)], where \(S_i\) is the spatial size of the \(i\)-th stage of the encoder. For image segmentation, please use PFPN.
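To make the spatial sizes \(S_i\) in the warning concrete, here is a minimal sketch that computes them for each encoder stage. It assumes a ResNet-style encoder whose stages downsample the input by 4, 8, 16 and 32; `stage_sizes` and its `strides` argument are illustrative names, not part of glasses.

```python
def stage_sizes(input_size: int, strides=(4, 8, 16, 32)) -> list:
    """Return the spatial side S_i of every encoder stage.

    Assumption: each stage divides the input side by its stride, which is
    what a ResNet-style encoder does for inputs divisible by 32.
    """
    return [input_size // stride for stride in strides]


sizes = stage_sizes(224)
print(sizes)  # [56, 28, 14, 7]
```

Because every stage has a different \(S_i\), the outputs cannot be stacked into one segmentation map without further upsampling, which is the step PFPN adds.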

Examples

Default models

>>> FPN()

You can easily customize your model

>>> # change activation
>>> FPN(activation=nn.SELU)
>>> # change number of classes (default is 2)
>>> FPN(n_classes=2)
>>> # change encoder
>>> fpn = FPN(encoder=lambda *args, **kwargs: ResNet.resnet26(*args, **kwargs).encoder,)
>>> fpn = FPN(encoder=lambda *args, **kwargs: EfficientNet.efficientnet_b2(*args, **kwargs).encoder,)
>>> # change decoder
>>> FPN(decoder=partial(FPNDecoder, pyramid_width=64, prediction_width=32))
>>> # pass a different block to encoder
>>> FPN(encoder=partial(ResNetEncoder, block=SENetBasicBlock))
>>> # all *Encoder classes can be directly used
>>> fpn = FPN(encoder=partial(ResNetEncoder, block=ResNetBottleneckBlock, depths=[2,2,2,2]))
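The customization examples rely on passing a class or a `functools.partial` rather than an instance, so that the model can build the component itself. The sketch below shows that pattern in isolation; `ToyDecoder` and `ToyModel` are hypothetical stand-ins written for this illustration and do not reproduce the glasses implementation.

```python
from functools import partial


class ToyDecoder:
    """Hypothetical stand-in for a decoder class (not glasses' FPNDecoder)."""

    def __init__(self, start_features: int = 512, pyramid_width: int = 256,
                 prediction_width: int = 128):
        self.start_features = start_features
        self.pyramid_width = pyramid_width
        self.prediction_width = prediction_width


class ToyModel:
    """Hypothetical model that receives a decoder factory and calls it."""

    def __init__(self, decoder=ToyDecoder, start_features: int = 512):
        # The model decides when to instantiate the decoder and supplies
        # the arguments that depend on the encoder.
        self.decoder = decoder(start_features=start_features)


# partial pre-fills the widths and returns a new, still uninstantiated callable
narrow_decoder = partial(ToyDecoder, pyramid_width=64, prediction_width=32)
model = ToyModel(decoder=narrow_decoder, start_features=2048)

print(model.decoder.pyramid_width)     # 64
print(model.decoder.prediction_width)  # 32
print(model.decoder.start_features)    # 2048
```

Passing a factory keeps the caller from having to know values, such as the encoder's output width, that only the model can determine.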

- Parameters

in_channels (int, optional) – Number of channels in the input image. Defaults to 1.

n_classes (int, optional) – Number of output classes. Defaults to 2.

encoder (Encoder, optional) – Encoder used to extract features from the input. Defaults to ResNetEncoder.

decoder (nn.Module, optional) – Decoder applied to the encoder features. Defaults to FPNDecoder.

Initializes internal Module state, shared by both nn.Module and ScriptModule.

- training: bool

- class glasses.models.segmentation.fpn.FPNDecoder(start_features: int = 512, pyramid_width: int = 256, prediction_width: int = 128, lateral_widths: Optional[List[int]] = None, segmentation_branch: torch.nn.modules.module.Module = <class 'glasses.models.segmentation.fpn.FPNSegmentationBranch'>, block: torch.nn.modules.module.Module = <class 'glasses.nn.blocks.ConvBnAct'>, **kwargs)

Bases: torch.nn.modules.module.Module

FPN decoder composed of several upsampling layers that decrease the number of features and increase the resolution.

Initializes internal Module state, shared by both nn.Module and ScriptModule.

- forward(x: torch.Tensor, residuals: List[torch.Tensor]) → torch.Tensor

Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

- training: bool

- class glasses.models.segmentation.fpn.FPNSegmentationBlock(in_features: int, out_features: int, block: torch.nn.modules.module.Module = <class 'glasses.nn.blocks.ConvBnAct'>, **kwargs)

Bases: torch.nn.modules.module.Module

FPN segmentation (smooth) layer used to smooth and upsample the decoder features

- Parameters

in_features (int) – Number of input features

out_features (int) – Number of output features

block (nn.Module, optional) – Block used. Defaults to ConvBnAct.

- forward(x: torch.Tensor) → torch.Tensor

Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

- training: bool

- class glasses.models.segmentation.fpn.FPNSegmentationBranch(in_features: int = 256, out_features: int = 128, depth: int = 3, layer=<class 'glasses.models.segmentation.fpn.FPNSegmentationLayer'>, block: torch.nn.modules.module.Module = <class 'glasses.nn.blocks.ConvBnAct'>, **kwargs)

Bases: torch.nn.modules.module.Module

Initializes internal Module state, shared by both nn.Module and ScriptModule.

- forward(x: torch.Tensor, residuals: List[torch.Tensor]) → torch.Tensor

Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

- training: bool

- class glasses.models.segmentation.fpn.FPNSegmentationLayer(*args, depth: int = 0, **kwargs)

Bases: glasses.models.segmentation.fpn.PFPNSegmentationLayer

Initializes internal Module state, shared by both nn.Module and ScriptModule.

- class glasses.models.segmentation.fpn.FPNUpLayer(in_features: int, out_features: int, block: torch.nn.modules.module.Module = <class 'glasses.nn.blocks.ConvBnAct'>, upsample: bool = True, **kwargs)

Bases: torch.nn.modules.module.Module

FPN up layer (right side).

- Parameters

in_features (int) – Number of input features

out_features (int) – Number of output features

block (nn.Module, optional) – Block used. Defaults to ConvBnAct.

Initializes internal Module state, shared by both nn.Module and ScriptModule.

- forward(x: torch.Tensor, res: torch.Tensor) → torch.Tensor

Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

- training: bool
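As a rough illustration of what an FPN up layer does with its two inputs, the sketch below upsamples a coarse map by 2 and adds a finer lateral map to it, following the top-down pathway described in the FPN paper. It works on plain nested lists, omits the learned convolution `block`, and uses hypothetical helper names (`upsample2x`, `up_step`); it is not the glasses implementation.

```python
def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a 2D grid given as a list of rows."""
    out = []
    for row in x:
        wide = [value for value in row for _ in range(2)]  # repeat each column
        out.append(wide)
        out.append(list(wide))  # repeat each row
    return out


def up_step(x, res, upsample=True):
    """Optionally upsample the coarse map x, then add the lateral map res."""
    up = upsample2x(x) if upsample else x
    return [[a + b for a, b in zip(row_up, row_res)]
            for row_up, row_res in zip(up, res)]


coarse = [[1, 2],
          [3, 4]]
lateral = [[10] * 4 for _ in range(4)]

print(up_step(coarse, lateral))
# [[11, 11, 12, 12], [11, 11, 12, 12], [13, 13, 14, 14], [13, 13, 14, 14]]
```

The `upsample` flag mirrors the parameter of the same name in the signature above; in this sketch, setting it to `False` adds the two maps at their current resolution.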

- class glasses.models.segmentation.fpn.Merge(policy: str = 'sum')

Bases: torch.nn.modules.module.Module

This layer merges all the features by summing them.

- Parameters

policy (str, optional) – Policy used to merge the features. Defaults to 'sum'.

Initializes internal Module state, shared by both nn.Module and ScriptModule.

- forward(features)

Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

- training: bool
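Merging by summation, as described for `Merge`, amounts to an element-wise sum over feature maps of identical shape. The following is a minimal sketch on nested lists; the `merge` function is a hypothetical helper, and only the documented 'sum' policy is handled.

```python
def merge(features, policy="sum"):
    """Element-wise merge of equally shaped 2D feature maps."""
    if policy != "sum":
        # Only the policy named in the documentation is sketched here.
        raise ValueError(f"unsupported policy: {policy!r}")
    # zip(*features) walks all maps in lockstep: first by row, then by cell.
    return [[sum(cells) for cells in zip(*rows)] for rows in zip(*features)]


maps = [
    [[1, 2], [3, 4]],
    [[10, 20], [30, 40]],
    [[100, 200], [300, 400]],
]

print(merge(maps))  # [[111, 222], [333, 444]]
```

Summation requires every input to share the same spatial size, which is why the features are brought to a common resolution before this step.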

- class glasses.models.segmentation.fpn.PFPN(*args, n_classes: int = 2, decoder: torch.nn.modules.module.Module = functools.partial(<class 'glasses.models.segmentation.fpn.FPNDecoder'>, segmentation_branch=functools.partial(<class 'glasses.models.segmentation.fpn.FPNSegmentationBranch'>, layer=<class 'glasses.models.segmentation.fpn.PFPNSegmentationLayer'>)), **kwargs)

Bases:

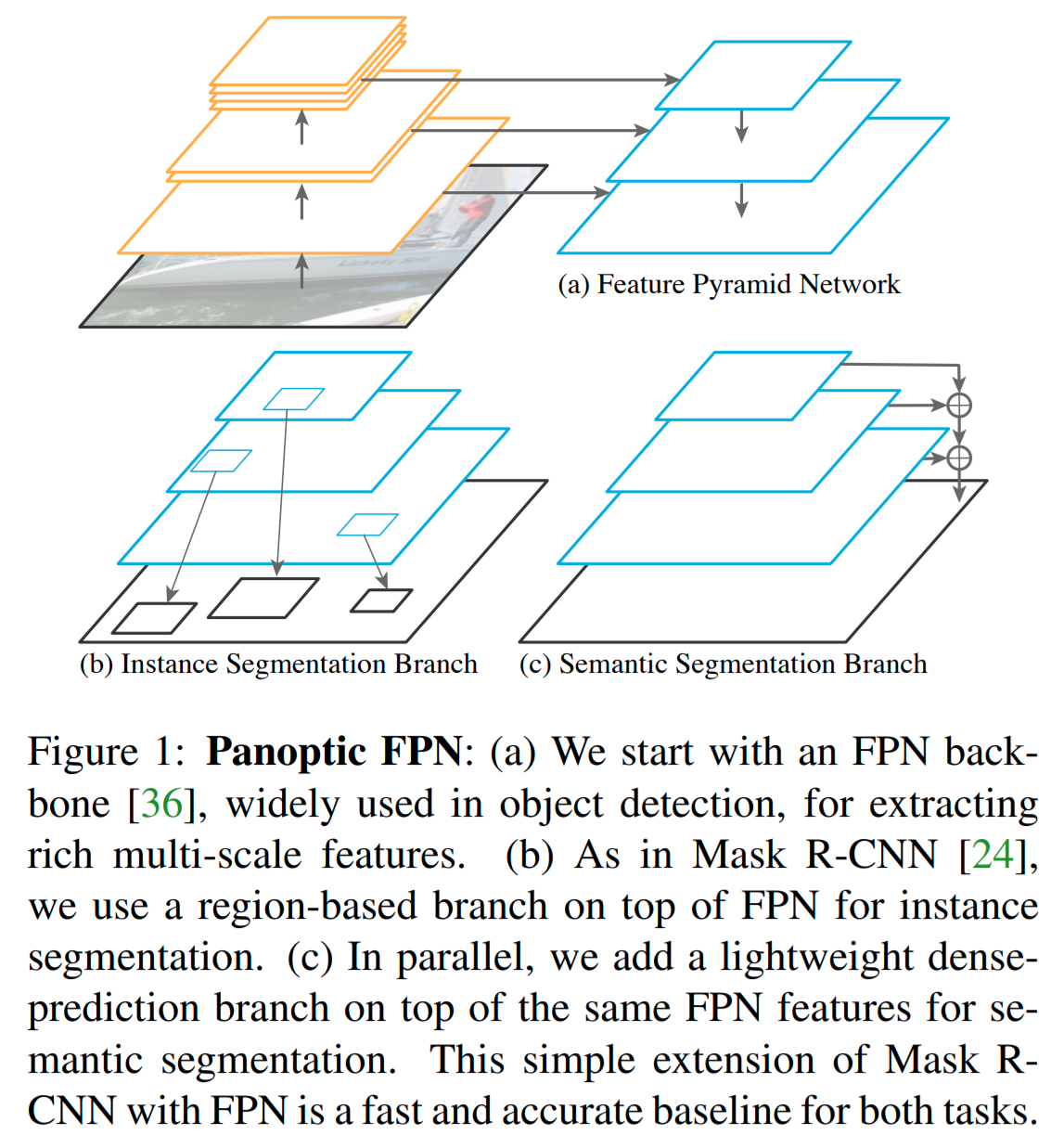

glasses.models.segmentation.fpn.FPNImplementation of Panoptic Feature Pyramid Networks proposed in Panoptic Feature Pyramid Networks

Each feature map obtained from the segmentation branch is upsampled to match \(\frac{1}{4}\) of the input size; with a ResNet encoder and a \(224 \times 224\) input this is \(56\). The feature maps are then merged by summing them into a single tensor, which is upsampled to the input spatial shape.
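The arithmetic behind this can be sketched as follows: for each stage, count how many 2x upsampling steps are needed to reach \(\frac{1}{4}\) of the input. The stage strides (4, 8, 16, 32) and the 224 input side are assumptions that match a ResNet-style encoder, and `upsample_steps` is a hypothetical helper rather than a glasses function.

```python
def upsample_steps(stride: int, target_stride: int = 4) -> int:
    """Number of 2x upsampling steps needed to bring a stage to the target scale.

    Assumption: stride / target_stride is a power of two.
    """
    ratio = stride // target_stride
    return ratio.bit_length() - 1  # integer log2 for powers of two


input_size = 224
for stride in (4, 8, 16, 32):
    side = input_size // stride
    steps = upsample_steps(stride)
    print(stride, side, steps, side * 2 ** steps)
# 4 56 0 56
# 8 28 1 56
# 16 14 2 56
# 32 7 3 56
```

Once every stage sits at side 56, the maps can be summed, and a final 4x upsampling restores the 224 input side.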

Examples

Create a default model

>>> PFPN()

You can easily customize your model

>>> # change activation
>>> PFPN(activation=nn.SELU)
>>> # change number of classes (default is 2)
>>> PFPN(n_classes=2)
>>> # change encoder
>>> pfpn = PFPN(encoder=lambda *args, **kwargs: ResNet.resnet26(*args, **kwargs).encoder,)
>>> pfpn = PFPN(encoder=lambda *args, **kwargs: EfficientNet.efficientnet_b2(*args, **kwargs).encoder,)
>>> # change decoder
>>> PFPN(decoder=partial(PFPNDecoder, pyramid_width=64, prediction_width=32))
>>> # pass a different block to encoder
>>> PFPN(encoder=partial(ResNetEncoder, block=SENetBasicBlock))
>>> # all *Encoder classes can be directly used
>>> pfpn = PFPN(encoder=partial(ResNetEncoder, block=ResNetBottleneckBlock, depths=[2,2,2,2]))

- Parameters

in_channels (int, optional) – Number of channels in the input image. Defaults to 1.

n_classes (int, optional) – Number of output classes. Defaults to 2.

encoder (Encoder, optional) – Encoder used to extract features from the input. Defaults to ResNetEncoder.

decoder (nn.Module, optional) – Decoder applied to the encoder features. Defaults to PFPNDecoder.

Initializes internal Module state, shared by both nn.Module and ScriptModule.

- training: bool

- glasses.models.segmentation.fpn.PFPNDecoder = functools.partial(<class 'glasses.models.segmentation.fpn.FPNDecoder'>, segmentation_branch=functools.partial(<class 'glasses.models.segmentation.fpn.FPNSegmentationBranch'>, layer=<class 'glasses.models.segmentation.fpn.PFPNSegmentationLayer'>))

Panoptic FPN Decoder that uses PFPNSegmentationBranch() as segmentation branch

- glasses.models.segmentation.fpn.PFPNSegmentationBranch = functools.partial(<class 'glasses.models.segmentation.fpn.FPNSegmentationBranch'>, layer=<class 'glasses.models.segmentation.fpn.PFPNSegmentationLayer'>)

Panoptic FPN Segmentation Branch that upsamples every feature map to match \(\frac{1}{4}\) of the spatial dimension of the input