glasses.models.classification.resnet package

Module contents

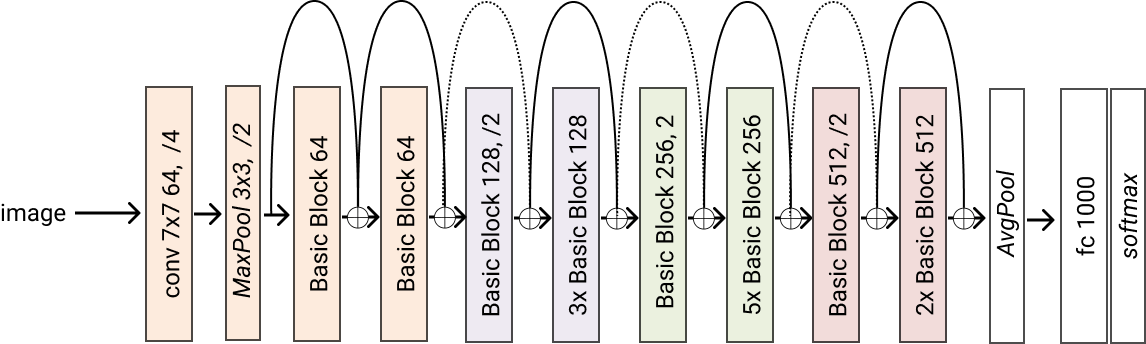

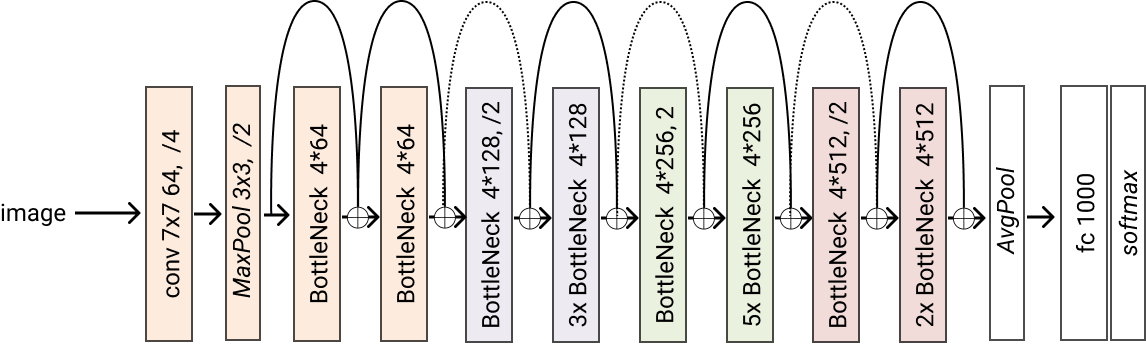

- class glasses.models.classification.resnet.ResNet(encoder: torch.nn.modules.module.Module = <class 'glasses.models.classification.resnet.ResNetEncoder'>, head: torch.nn.modules.module.Module = <class 'glasses.models.classification.resnet.ResNetHead'>, **kwargs)

Bases: glasses.models.classification.base.ClassificationModule

Implementation of ResNet proposed in Deep Residual Learning for Image Recognition.

    from functools import partial
    from glasses.models.classification.resnet import (
        ResNet, ResNetBottleneckBlock, ResNetShorcutD, ResNetStem3x3)

    ResNet.resnet18()
    ResNet.resnet26()
    ResNet.resnet34()
    ResNet.resnet50()
    ResNet.resnet101()
    ResNet.resnet152()
    ResNet.resnet200()

Variants (d) proposed in Bag of Tricks for Image Classification with Convolutional Neural Networks (https://arxiv.org/pdf/1812.01187.pdf):

    ResNet.resnet26d()
    ResNet.resnet34d()
    ResNet.resnet50d()
    # You can construct your own one by changing `stem` and `block`
    resnet101d = ResNet.resnet101(stem=ResNetStem3x3, block=partial(ResNetBottleneckBlock, shortcut=ResNetShorcutD))
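The variant names encode the number of weighted layers. As a rough sanity check, the sketch below recomputes those numbers from per-stage block counts. The depths are the configurations from the ResNet paper for 18 to 152 layers and the commonly used ones for 26 and 200; they are assumed here, not read from glasses. Shortcut projections are not counted, following the usual convention.

```python
# Sketch: count the weighted layers of each ResNet variant.
# ASSUMPTION: stage depths follow the ResNet paper for 18-152 and the
# commonly used configurations for 26 and 200; they are not read from glasses.
BASIC_CONVS = 2       # a basic block has two 3x3 convs
BOTTLENECK_CONVS = 3  # a bottleneck block has 1x1, 3x3, 1x1 convs

VARIANTS = {
    "resnet18": ([2, 2, 2, 2], BASIC_CONVS),
    "resnet26": ([2, 2, 2, 2], BOTTLENECK_CONVS),
    "resnet34": ([3, 4, 6, 3], BASIC_CONVS),
    "resnet50": ([3, 4, 6, 3], BOTTLENECK_CONVS),
    "resnet101": ([3, 4, 23, 3], BOTTLENECK_CONVS),
    "resnet152": ([3, 8, 36, 3], BOTTLENECK_CONVS),
    "resnet200": ([3, 24, 36, 3], BOTTLENECK_CONVS),
}


def weighted_layers(depths, convs_per_block):
    """Stem conv + all block convs + final fully connected layer."""
    return 1 + sum(depths) * convs_per_block + 1


for name, (depths, convs) in VARIANTS.items():
    print(f"{name}: depths={depths}, layers={weighted_layers(depths, convs)}")
```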

Examples

    import torch
    from functools import partial
    from torch import nn
    from glasses.models.classification.resnet import (
        ResNet, ResNetBasicBlock, ResNetShorcutD, ResNetStem3x3)
    from glasses.models.classification.senet import SENetBasicBlock  # assumed import path

    # change activation
    ResNet.resnet18(activation=nn.SELU)
    # change number of classes (default is 1000)
    ResNet.resnet18(n_classes=100)
    # pass a different block
    ResNet.resnet18(block=SENetBasicBlock)
    # change the stem
    model = ResNet.resnet18(stem=ResNetStem3x3)
    # change shortcut
    model = ResNet.resnet18(block=partial(ResNetBasicBlock, shortcut=ResNetShorcutD))
    # get the features of an input
    x = torch.rand((1, 3, 224, 224))
    model = ResNet.resnet18()
    # first access .features: this activates the forward hooks and tells the model you would like to get the features
    model.encoder.features
    model(x)
    # get the features from the encoder
    features = model.encoder.features
    print([f.shape for f in features])
    # [torch.Size([1, 64, 112, 112]), torch.Size([1, 64, 56, 56]), torch.Size([1, 128, 28, 28]), torch.Size([1, 256, 14, 14])]
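The feature sizes printed above (112, 56, 28, 14) halve at every step. A minimal sketch of where they come from, assuming a torchvision-style stem (7x7 conv with stride 2 and padding 3, followed by a 3x3 max pool with stride 2 and padding 1) and stride-2 3x3 convs in the downsampling layers; glasses may configure these layers differently.

```python
# Sketch: trace the spatial size of a 224x224 input through a ResNet-style
# network. ASSUMPTION: torchvision-style stem and stride-2 3x3 convs in the
# downsampling layers; glasses' exact layer settings may differ.


def out_size(size, kernel, stride, padding):
    """Standard output-size formula for conv / pooling layers."""
    return (size + 2 * padding - kernel) // stride + 1


size = 224
sizes = []
size = out_size(size, kernel=7, stride=2, padding=3)  # stem conv
sizes.append(size)
size = out_size(size, kernel=3, stride=2, padding=1)  # stem max pool
sizes.append(size)
for _ in range(3):  # three downsampling layers
    size = out_size(size, kernel=3, stride=2, padding=1)
    sizes.append(size)

print(sizes)  # [112, 56, 28, 14, 7]
```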

- Parameters

in_channels (int, optional) – Number of channels in the input image (3 for RGB and 1 for grayscale). Defaults to 3.

n_classes (int, optional) – Number of classes. Defaults to 1000.


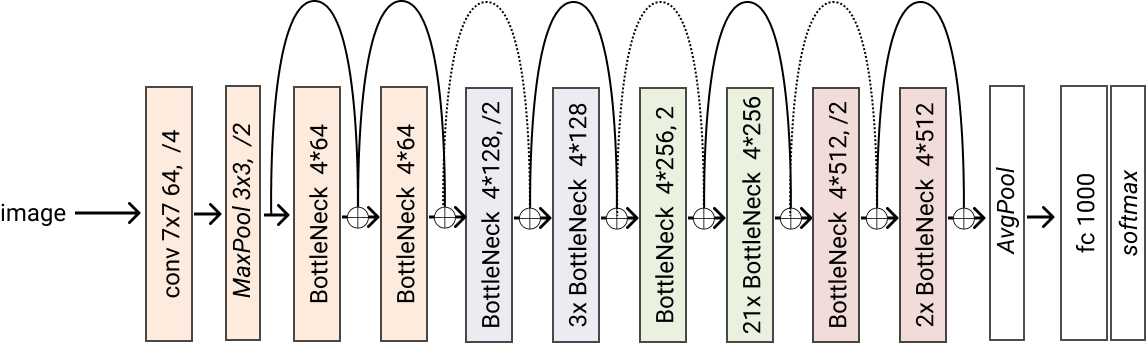

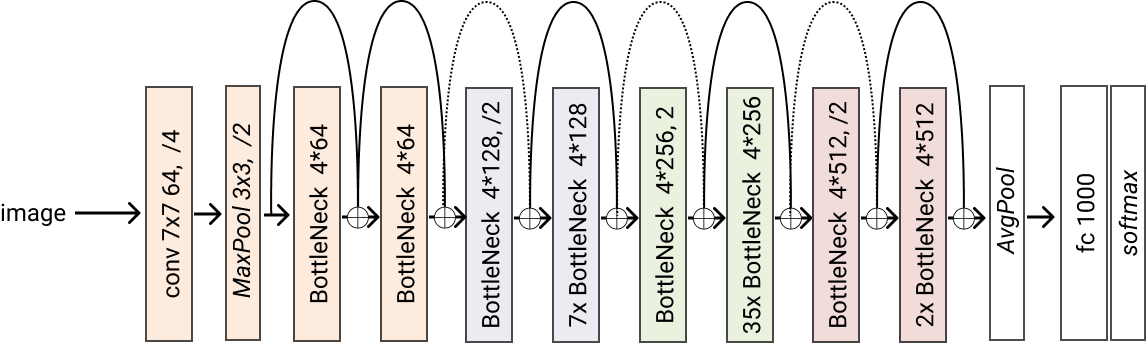

- classmethod resnet101(*args, block=<class 'glasses.models.classification.resnet.ResNetBottleneckBlock'>, **kwargs) → glasses.models.classification.resnet.ResNet

Creates a resnet101 model

- Returns

A resnet101 model

- Return type

ResNet

- classmethod resnet152(*args, block=<class 'glasses.models.classification.resnet.ResNetBottleneckBlock'>, **kwargs) → glasses.models.classification.resnet.ResNet

Creates a resnet152 model

- Returns

A resnet152 model

- Return type

ResNet

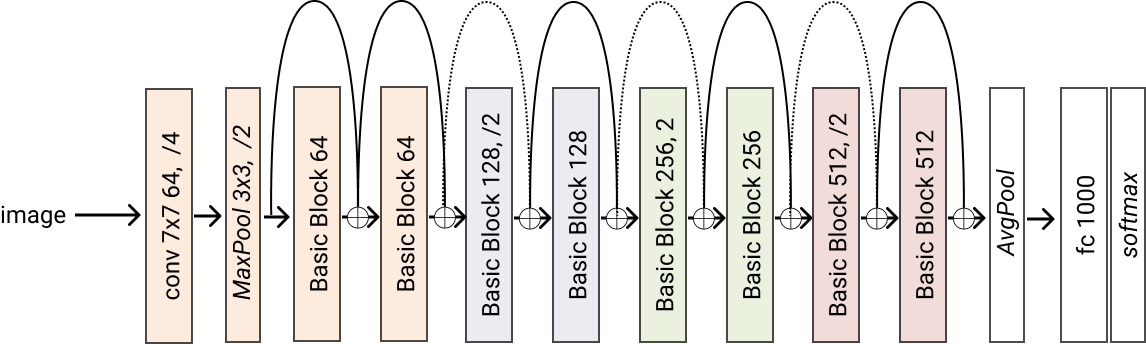

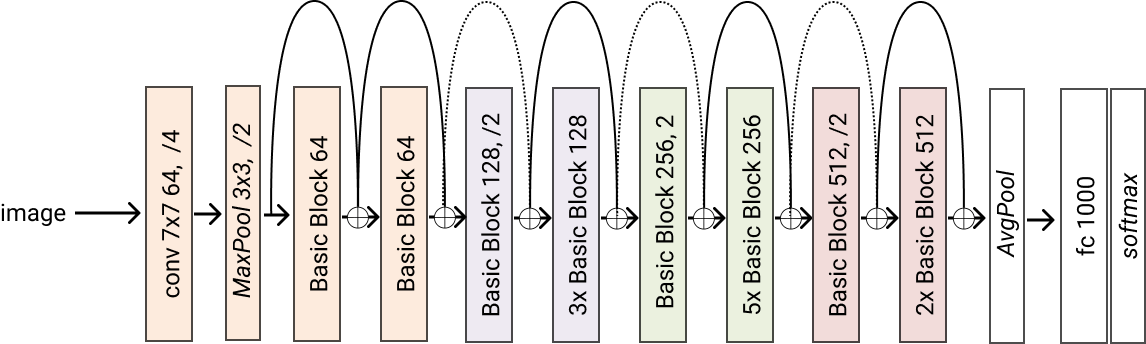

- classmethod resnet18(*args, block=<class 'glasses.models.classification.resnet.ResNetBasicBlock'>, **kwargs) → glasses.models.classification.resnet.ResNet

Creates a resnet18 model

- Returns

A resnet18 model

- Return type

ResNet

- classmethod resnet200(*args, block=<class 'glasses.models.classification.resnet.ResNetBottleneckBlock'>, **kwargs)

Creates a resnet200 model

- Returns

A resnet200 model

- Return type

ResNet

- classmethod resnet26(*args, block=<class 'glasses.models.classification.resnet.ResNetBottleneckBlock'>, **kwargs) → glasses.models.classification.resnet.ResNet

Creates a resnet26 model

- Returns

A resnet26 model

- Return type

ResNet

- classmethod resnet26d(*args, block=<class 'glasses.models.classification.resnet.ResNetBottleneckBlock'>, **kwargs) → glasses.models.classification.resnet.ResNet

Creates a resnet26d model

- Returns

A resnet26d model

- Return type

ResNet

- classmethod resnet34(*args, block=<class 'glasses.models.classification.resnet.ResNetBasicBlock'>, **kwargs) → glasses.models.classification.resnet.ResNet

Creates a resnet34 model

- Returns

A resnet34 model

- Return type

ResNet

- classmethod resnet34d(*args, block=<class 'glasses.models.classification.resnet.ResNetBasicBlock'>, **kwargs) → glasses.models.classification.resnet.ResNet

Creates a resnet34d model

- Returns

A resnet34d model

- Return type

ResNet

- classmethod resnet50(*args, block=<class 'glasses.models.classification.resnet.ResNetBottleneckBlock'>, **kwargs) → glasses.models.classification.resnet.ResNet

Creates a resnet50 model

- Returns

A resnet50 model

- Return type

ResNet

- classmethod resnet50d(*args, block=<class 'glasses.models.classification.resnet.ResNetBottleneckBlock'>, **kwargs) → glasses.models.classification.resnet.ResNet

Creates a resnet50d model

- Returns

A resnet50d model

- Return type

ResNet

- training: bool

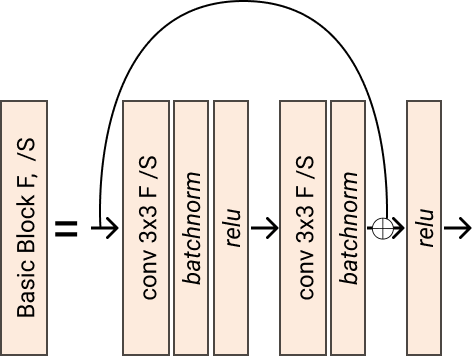

- class glasses.models.classification.resnet.ResNetBasicBlock(in_features: int, out_features: int, activation: torch.nn.modules.module.Module = functools.partial(<class 'torch.nn.modules.activation.ReLU'>, inplace=True), stride: int = 1, shortcut: torch.nn.modules.module.Module = <class 'glasses.models.classification.resnet.ResNetShorcut'>, **kwargs)

Bases: torch.nn.modules.module.Module

Basic ResNet block composed of two 3x3 convs with a residual connection.

The residual connection is shown as a black line.

The output of the layer is defined as:

\(x' = F(x) + x\)
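A toy version of \(x' = F(x) + x\) on plain Python lists. The helper `needs_shortcut` is hypothetical and encodes the usual ResNet rule (assumed here) that a projection shortcut such as ResNetShorcut is required when the number of features or the stride changes, since otherwise F(x) and x could not be added element-wise.

```python
# Sketch of x' = F(x) + x on plain Python lists (no torch needed).
# ASSUMPTION: a projection shortcut is needed whenever the block changes
# the number of features or the stride; `needs_shortcut` is hypothetical,
# not part of glasses.


def needs_shortcut(in_features, out_features, stride):
    return in_features != out_features or stride != 1


def residual(x, f):
    """Add the block output F(x) to its input x element-wise."""
    fx = f(x)
    if len(fx) != len(x):
        raise ValueError("F(x) and x must have the same shape")
    return [a + b for a, b in zip(fx, x)]


def double(v):
    """A toy F that keeps the shape of its input."""
    return [2 * e for e in v]


print(residual([1.0, -2.0, 3.0], double))  # [3.0, -6.0, 9.0]
print(needs_shortcut(64, 64, 1), needs_shortcut(64, 128, 2))  # False True
```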

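- Parameters

in_features (int) – Number of input features

out_features (int) – Number of output features

activation (nn.Module, optional) – Activation function. Defaults to ReLUInPlace.

stride (int, optional) – Stride of the block; a value of 2 halves the spatial resolution. Defaults to 1.

shortcut (nn.Module, optional) – Shortcut applied to the residual when the input and output shapes do not match. Defaults to ResNetShorcut.

conv (nn.Module, optional) – Convolution layer. Defaults to nn.Conv2d.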


- forward(x: torch.Tensor) → torch.Tensor

Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

- training: bool

- class glasses.models.classification.resnet.ResNetBasicPreActBlock(in_features: int, out_features: int, activation: torch.nn.modules.module.Module = functools.partial(<class 'torch.nn.modules.activation.ReLU'>, inplace=True), stride: int = 1, shortcut: torch.nn.modules.module.Module = <class 'glasses.models.classification.resnet.ResNetShorcut'>, **kwargs)

Bases: glasses.models.classification.resnet.ResNetBasicBlock

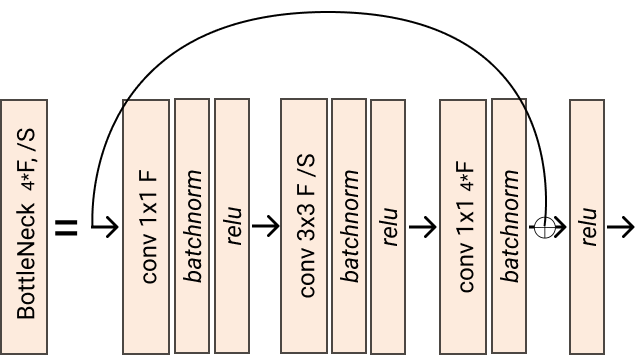

- class glasses.models.classification.resnet.ResNetBottleneckBlock(in_features: int, out_features: int, features: Optional[int] = None, activation: torch.nn.modules.module.Module = functools.partial(<class 'torch.nn.modules.activation.ReLU'>, inplace=True), reduction: int = 4, stride=1, shortcut=<class 'glasses.models.classification.resnet.ResNetShorcut'>, **kwargs)

Bases: glasses.models.classification.resnet.ResNetBasicBlock

ResNet Bottleneck block based on the torchvision implementation.

Although the paper says that the first 1x1 conv compresses the features, we follow the original implementation, which expands the out_features by a factor equal to reduction.

The stride is applied in the 3x3 conv instead of the first 1x1 conv; this improves accuracy, as in NVIDIA's ResNet-50 v1.5: https://ngc.nvidia.com/catalog/model-scripts/nvidia:resnet_50_v1_5_for_pytorch

The residual connection is shown as a black line.

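- Parameters

in_features (int) – Number of input features

out_features (int) – Number of output features

features (int, optional) – Number of features of the inner convolutions. Defaults to None, in which case it is derived from out_features and reduction.

activation (nn.Module, optional) – Activation function. Defaults to ReLUInPlace.

stride (int, optional) – Stride of the 3x3 convolution. Defaults to 1.

conv (nn.Module, optional) – Convolution layer. Defaults to nn.Conv2d.

reduction (int, optional) – Factor by which the inner features are reduced with respect to out_features. Defaults to 4.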
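To see why the bottleneck design is cheaper, the sketch below counts convolution weights for a block with 256 input and output features. It assumes the inner width is out_features // reduction, bias-free convolutions, and ignores BatchNorm and the shortcut; `bottleneck_weights` and `basic_weights` are hypothetical helpers.

```python
# Sketch: compare weight counts of a bottleneck block and a basic block.
# ASSUMPTIONS: inner width = out_features // reduction, convs have no bias,
# BatchNorm and the shortcut are ignored.


def conv_weights(c_in, c_out, k):
    """Number of weights of a k x k convolution without bias."""
    return c_in * c_out * k * k


def bottleneck_weights(in_features, out_features, reduction=4):
    inner = out_features // reduction
    return (conv_weights(in_features, inner, 1)      # 1x1 reduce
            + conv_weights(inner, inner, 3)          # 3x3 (carries the stride)
            + conv_weights(inner, out_features, 1))  # 1x1 expand by `reduction`


def basic_weights(in_features, out_features):
    return (conv_weights(in_features, out_features, 3)
            + conv_weights(out_features, out_features, 3))


print(bottleneck_weights(256, 256))  # 69632
print(basic_weights(256, 256))       # 1179648
```

With 256 features the bottleneck needs roughly 17 times fewer weights, which is what makes the deeper variants (resnet50 and above) affordable.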


- training: bool

- class glasses.models.classification.resnet.ResNetBottleneckPreActBlock(in_features: int, out_features: int, features: Optional[int] = None, activation: torch.nn.modules.module.Module = functools.partial(<class 'torch.nn.modules.activation.ReLU'>, inplace=True), reduction: int = 4, stride=1, shortcut=<class 'glasses.models.classification.resnet.ResNetShorcut'>, **kwargs)

Bases: glasses.models.classification.resnet.ResNetBottleneckBlock

Pre-activation ResNet bottleneck block proposed in Identity Mappings in Deep Residual Networks (https://arxiv.org/pdf/1603.05027.pdf).

- Parameters

in_features (int) – Number of input features

out_features (int) – Number of output features

activation (nn.Module, optional) – Activation function. Defaults to ReLUInPlace.

stride (int, optional) – Stride of the 3x3 convolution. Defaults to 1.

conv (nn.Module, optional) – Convolution layer. Defaults to nn.Conv2d.

- reduction: int = 4
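The pre-activation block moves the activation in front of the convolutions so that the identity path is left untouched. A scalar sketch of the two orderings, with BatchNorm omitted and a toy function `f` standing in for the convolution stack (both simplifications are assumptions made for illustration):

```python
# Sketch contrasting post-activation and pre-activation residual units on
# scalars. ASSUMPTIONS: BatchNorm is omitted and `f` stands in for the conv
# stack; this only illustrates where the activation sits.


def relu(v):
    return max(0.0, v)


def post_activation_unit(x, f):
    """Original ResNet: activation applied after the addition."""
    return relu(f(x) + x)


def pre_activation_unit(x, f):
    """Identity Mappings: activation applied before F, identity path untouched."""
    return x + f(relu(x))


def f(v):
    return 2.0 * v - 3.0


print(post_activation_unit(-1.0, f))  # 0.0
print(pre_activation_unit(-1.0, f))   # -4.0
```

In the pre-activation unit the input x reaches the output unchanged, so a negative value survives instead of being clipped by the final activation.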

- class glasses.models.classification.resnet.ResNetEncoder(in_channels: int = 3, start_features: int = 64, widths: List[int] = [64, 128, 256, 512], depths: List[int] = [2, 2, 2, 2], activation: torch.nn.modules.module.Module = functools.partial(<class 'torch.nn.modules.activation.ReLU'>, inplace=True), block: torch.nn.modules.module.Module = <class 'glasses.models.classification.resnet.ResNetBasicBlock'>, stem: torch.nn.modules.module.Module = <class 'glasses.models.classification.resnet.ResNetStem'>, downsample_first: bool = False, **kwargs)

Bases: glasses.models.base.Encoder

ResNet encoder composed of several layers with an increasing number of features.

- Parameters

in_channels (int, optional) – Number of channels in the input image. Defaults to 3.

start_features (int, optional) – Number of features produced by the stem. Defaults to 64.

widths (List[int], optional) – Number of output features of each layer. Defaults to [64, 128, 256, 512].

depths (List[int], optional) – Number of blocks in each layer. Defaults to [2, 2, 2, 2].

activation (nn.Module, optional) – Activation function. Defaults to ReLUInPlace.

block (nn.Module, optional) – Block used; available ones include ResNetBasicBlock and ResNetBottleneckBlock. Defaults to ResNetBasicBlock.

stem (nn.Module, optional) – Stem used. Defaults to ResNetStem.

downsample_first (bool, optional) – Whether the first layer also downsamples its input. Defaults to False.
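How widths, depths, and downsample_first might translate into per-layer settings. This is a sketch under stated assumptions, not glasses' code: `plan_layers` is hypothetical, each layer is assumed to map the previous width (or start_features for the first layer) to its own width, and every layer except the first is assumed to downsample with stride 2.

```python
# Sketch: derive per-layer settings from the encoder arguments.
# ASSUMPTIONS (not read from glasses): layer i maps widths[i-1] (or
# start_features for the first layer) to widths[i]; every layer except the
# first downsamples with stride 2, and the first does so only when
# downsample_first=True. `plan_layers` is a hypothetical helper.
from typing import List, Tuple


def plan_layers(start_features: int, widths: List[int], depths: List[int],
                downsample_first: bool = False) -> List[Tuple[int, int, int, int]]:
    """Return (in_features, out_features, depth, stride) for each layer."""
    if len(widths) != len(depths):
        raise ValueError("widths and depths must have the same length")
    in_widths = [start_features] + widths[:-1]
    plan = []
    for i, (c_in, c_out, depth) in enumerate(zip(in_widths, widths, depths)):
        stride = 2 if (i > 0 or downsample_first) else 1
        plan.append((c_in, c_out, depth, stride))
    return plan


for layer in plan_layers(64, [64, 128, 256, 512], [2, 2, 2, 2]):
    print(layer)
```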


- property features_widths

- forward(x)

Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this since the former takes care of running the registered hooks while the latter silently ignores them.

- property stages

- training: bool

- class glasses.models.classification.resnet.ResNetHead(in_features: int, n_classes: int)

Bases: torch.nn.modules.container.Sequential

This class represents the tail of ResNet. It performs a global pooling and maps the output to the correct class by using a fully connected layer.
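A pure-Python sketch of the head's computation, assuming average pooling for the global pooling step; `resnet_head`, the toy feature maps, and the weights are made up for illustration.

```python
# Sketch of what the head computes: global average pooling over the spatial
# grid, then a fully connected layer. Pure Python on nested lists;
# `resnet_head` and the toy weights are hypothetical.
from typing import List

Matrix = List[List[float]]


def global_avg_pool(feature_maps: List[Matrix]) -> List[float]:
    """One value per channel: the mean of its H x W map."""
    return [sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
            for fmap in feature_maps]


def linear(x: List[float], weight: Matrix, bias: List[float]) -> List[float]:
    """y = W x + b, with W of shape (n_classes, in_features)."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def resnet_head(feature_maps: List[Matrix], weight: Matrix, bias: List[float]) -> List[float]:
    return linear(global_avg_pool(feature_maps), weight, bias)


maps = [[[1.0, 3.0], [5.0, 7.0]],   # channel 0, mean 4.0
        [[0.0, 0.0], [2.0, 2.0]]]   # channel 1, mean 1.0
weight = [[1.0, 0.0], [0.5, 2.0], [0.0, -1.0]]  # 3 classes, 2 features
bias = [0.0, 0.0, 1.0]
print(resnet_head(maps, weight, bias))  # [4.0, 4.0, 0.0]
```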


- class glasses.models.classification.resnet.ResNetLayer(in_features: int, out_features: int, block: torch.nn.modules.module.Module = <class 'glasses.models.classification.resnet.ResNetBasicBlock'>, depth: int = 1, stride: int = 2, **kwargs)

Bases: torch.nn.modules.container.Sequential

- class glasses.models.classification.resnet.ResNetShorcut(in_features: int, out_features: int, stride: int = 2)

Bases: torch.nn.modules.container.Sequential

Shortcut applied by ResNet to change the number of channels of the residual when the residual and output features do not match.

- Parameters

in_features (int) – features (channels) of the input

out_features (int) – features (channels) of the desired output

stride (int, optional) – Stride used to match the spatial size of the output. Defaults to 2.


- class glasses.models.classification.resnet.ResNetShorcutD(in_features: int, out_features: int, stride: int = 2)

Bases: torch.nn.modules.container.Sequential

Shortcut proposed in Bag of Tricks for Image Classification with Convolutional Neural Networks

It applies average pooling to downsample the input instead of using stride=2 in the convolution layer.

- Parameters

in_features (int) – features (channels) of the input

out_features (int) – features (channels) of the desired output

stride (int, optional) – Stride used to match the spatial size of the output. Defaults to 2.
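Why averaging helps: a 1x1 convolution with stride 2 only reads one pixel out of every 2x2 window, discarding three quarters of the input, while 2x2 average pooling with stride 2 summarises all of them. A small sketch on a 4x4 single-channel map (the channel mixing done by the 1x1 conv is omitted, and both helpers are hypothetical):

```python
# Sketch: why ResNet-D's shortcut averages before projecting.
# A stride-2 1x1 conv only reads every other pixel, while 2x2 average pooling
# (stride 2) summarises every pixel. Pure Python; channel mixing is omitted.
from typing import List

Grid = List[List[float]]


def stride2_sample(grid: Grid) -> Grid:
    """Positions a stride-2 1x1 convolution actually sees."""
    return [row[::2] for row in grid[::2]]


def avg_pool2x2(grid: Grid) -> Grid:
    """2x2 average pooling with stride 2."""
    return [[(grid[i][j] + grid[i][j + 1] + grid[i + 1][j] + grid[i + 1][j + 1]) / 4
             for j in range(0, len(grid[0]) - 1, 2)]
            for i in range(0, len(grid) - 1, 2)]


x = [[1.0, 2.0, 3.0, 4.0],
     [5.0, 6.0, 7.0, 8.0],
     [9.0, 10.0, 11.0, 12.0],
     [13.0, 14.0, 15.0, 16.0]]
print(stride2_sample(x))  # [[1.0, 3.0], [9.0, 11.0]]
print(avg_pool2x2(x))     # [[3.5, 5.5], [11.5, 13.5]]
```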


- class glasses.models.classification.resnet.ResNetStem(in_features: int, out_features: int, activation: torch.nn.modules.module.Module = functools.partial(<class 'torch.nn.modules.activation.ReLU'>, inplace=True))

Bases: torch.nn.modules.container.Sequential

- class glasses.models.classification.resnet.ResNetStem3x3(in_features: int, out_features: int, widths: List[int] = [32, 32], activation: torch.nn.modules.module.Module = <class 'torch.nn.modules.activation.ReLU'>)

Bases: torch.nn.modules.container.Sequential

Modified stem proposed in Bag of Tricks for Image Classification with Convolutional Neural Networks

The observation is that the computational cost of a convolution is quadratic in the kernel width or height: a 7 × 7 convolution is 5.4 times more expensive than a 3 × 3 convolution. This tweak therefore replaces the 7 × 7 convolution in the input stem with three consecutive 3 × 3 convolutions.
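The 5.4 figure is simply the ratio of kernel areas, 49 / 9. The sketch below reproduces it and also shows that three stacked stride-1 3 × 3 convolutions cover the same 7 × 7 receptive field; the last line assumes all three convolutions keep the same number of channels, which is a simplification of the [32, 32] stem.

```python
# Sketch of the arithmetic behind the stem tweak.
# Cost of a k x k convolution per output position with fixed channels ~ k * k.
# ASSUMPTION for the last comparison: all three 3x3 convs keep the same
# number of channels C, which is not exactly the [32, 32] stem configuration.


def kernel_cost(k: int) -> int:
    return k * k


def receptive_field(kernel: int, n_layers: int) -> int:
    """Receptive field of n stacked stride-1 convs with the same kernel."""
    return 1 + n_layers * (kernel - 1)


ratio = kernel_cost(7) / kernel_cost(3)
print(round(ratio, 2))  # 5.44
print(receptive_field(3, 3))  # 7
print(round(kernel_cost(7) / (3 * kernel_cost(3)), 2))  # 1.81
```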

- Parameters

in_features (int) – Number of input features (channels)

out_features (int) – Number of output features (channels)

widths (List[int], optional) – Widths of the inner stem convolutions. Defaults to [32, 32] (32 in, 32 out).

activation (nn.Module, optional) – Activation function. Defaults to nn.ReLU.
